Workflow Patterns

Parallel

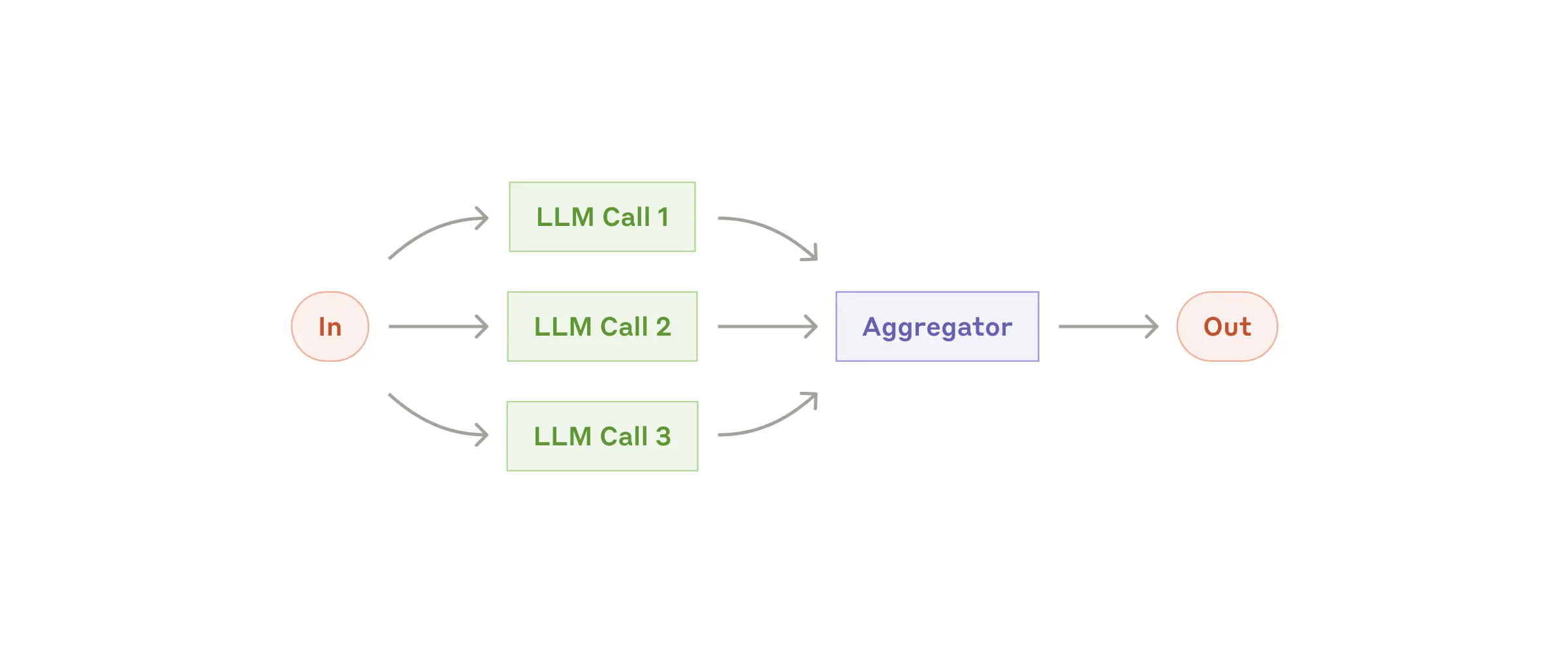

Execute multiple tasks simultaneously with intelligent result aggregation and conflict resolution.

Overview

The Parallel Workflow pattern uses a fan-out/fan-in approach: multiple agents work on different aspects of a task simultaneously, then a coordinating agent aggregates their results.

Quick Example
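
The fan-out/fan-in flow described above can be sketched with plain `asyncio`. This is a minimal illustration, not the library's own API: the worker functions `check_grammar` and `check_length`, the `aggregate` step, and `run_parallel` are hypothetical stand-ins for real agents and a real coordinating agent.

```python
import asyncio


# Hypothetical fan-out workers: each stands in for one specialized agent.
async def check_grammar(text: str) -> str:
    await asyncio.sleep(0)  # placeholder for real async work, e.g. a model call
    return f"grammar: {len(text.split())} words checked"


async def check_length(text: str) -> str:
    await asyncio.sleep(0)  # placeholder for real async work
    return f"length: {len(text)} characters"


# Hypothetical fan-in step: stands in for the coordinating agent.
def aggregate(results: list[str]) -> str:
    return "\n".join(results)


async def run_parallel(text: str) -> str:
    # Fan-out: both workers are scheduled concurrently on the same input.
    results = await asyncio.gather(check_grammar(text), check_length(text))
    # Fan-in: combine the individual results into one output.
    return aggregate(list(results))


report = asyncio.run(run_parallel("The quick brown fox"))
print(report)
```

`asyncio.gather` returns results in the order the awaitables were passed, so the aggregation step sees a predictable ordering regardless of which worker finishes first.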

Key Features

- Fan-Out Processing: Distribute work across multiple specialized agents

- Fan-In Aggregation: Intelligent result compilation and synthesis

- Concurrent Execution: All fan-out agents work simultaneously

- Specialized Roles: Each agent focuses on specific expertise areas

- Structured Output: Coordinated final result from aggregator agent
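
To make the specialized-role and structured-output features concrete, the sketch below gives each fan-out worker a named role and has the aggregator return a typed report. All names here (`Finding`, `Report`, `ROLES`, `run_role`, `review`) and the scoring rules are assumptions made up for illustration; a real setup would replace the scoring functions with actual agents.

```python
import asyncio
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    role: str
    score: int


@dataclass
class Report:
    findings: list[Finding]
    average_score: float
    weakest_role: str


# Hypothetical roles: role name -> toy scoring function (stand-ins for agents).
ROLES: dict[str, Callable[[str], int]] = {
    "proofreader": lambda text: 10 - 2 * text.count("  "),  # penalize double spaces
    "brevity_reviewer": lambda text: 10 if len(text.split()) <= 20 else 5,
    "tone_reviewer": lambda text: 4 if text.isupper() else 9,  # all caps scores low
}


async def run_role(role: str, score_fn: Callable[[str], int], text: str) -> Finding:
    await asyncio.sleep(0.01)  # placeholder for real agent latency
    return Finding(role=role, score=score_fn(text))


def aggregate(findings: list[Finding]) -> Report:
    # Fan-in: turn the individual findings into one structured result.
    average = sum(f.score for f in findings) / len(findings)
    weakest = min(findings, key=lambda f: f.score)
    return Report(findings=findings, average_score=average, weakest_role=weakest.role)


async def review(text: str) -> Report:
    # Fan-out: one concurrent task per role, all reading the same input.
    tasks = [run_role(role, fn, text) for role, fn in ROLES.items()]
    findings = await asyncio.gather(*tasks)  # results keep the order of ROLES
    return aggregate(list(findings))


report = asyncio.run(review("Please  review this draft."))
print(report.weakest_role, report.average_score)
```

Returning a dataclass rather than free text is one possible design choice: downstream code can read `report.weakest_role` directly instead of parsing the aggregator's prose.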

Use Cases

- Content Review: Multiple reviewers checking different aspects

- Multi-perspective Analysis: Getting diverse viewpoints on complex topics

- Quality Assurance: Parallel validation across different criteria

- Research Synthesis: Gathering information from multiple specialized sources

Full Implementation

See the complete parallel workflow example with a student assignment grading use case.