Evaluator-Optimizer

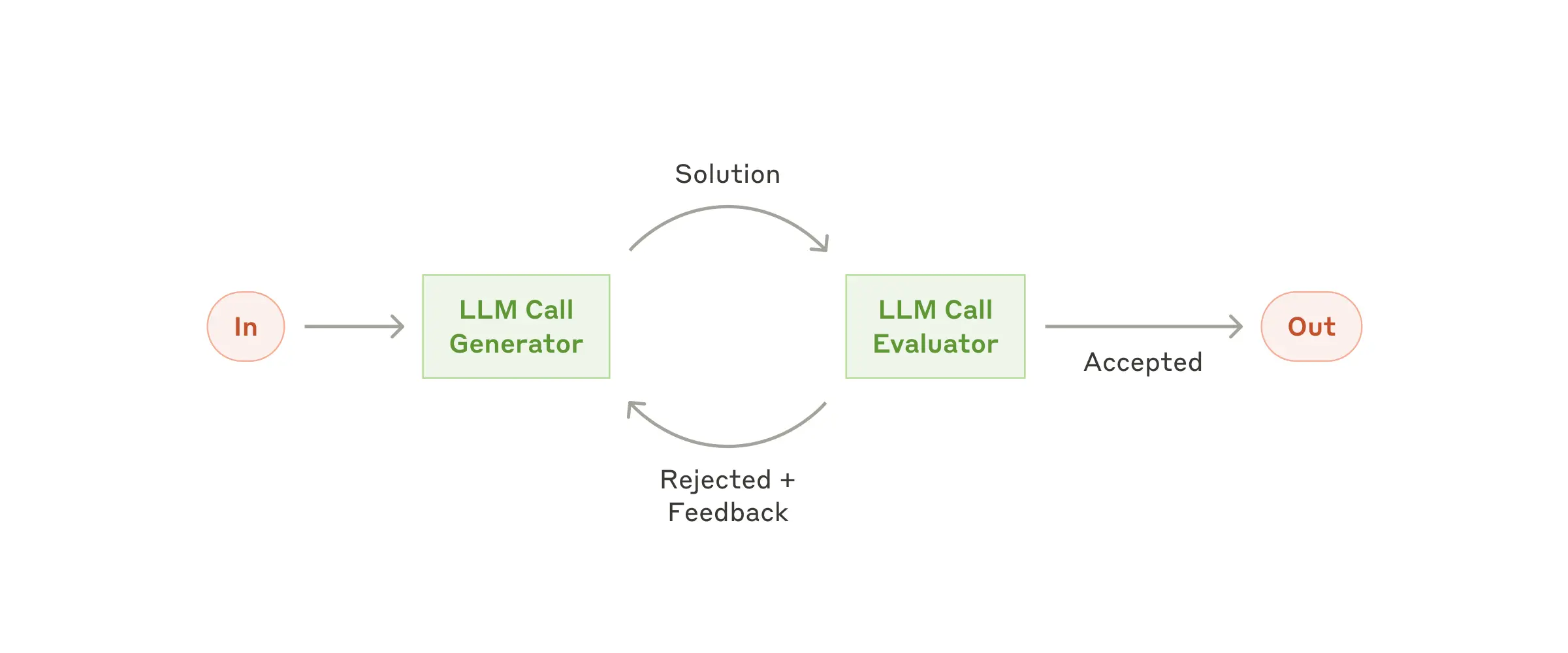

Quality control with LLM-as-judge evaluation and iterative response refinement.

Overview

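The Evaluator-Optimizer pattern implements quality control through LLM-as-judge evaluation: one agent produces a response, an evaluator agent judges it against the quality criteria and returns feedback, and the response is revised using that feedback. The cycle repeats until the response meets the specified quality threshold.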
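The generate, judge, and revise cycle can be sketched in plain Python. This is a minimal sketch, not any framework's API: `evaluator_optimizer`, `Evaluation`, and the `generate`/`evaluate`/`refine` callables are hypothetical names, the stub functions stand in for real LLM calls, and the judge score is assumed to be a number in [0, 1].

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Evaluation:
    score: float   # judge's quality score, assumed to lie in [0, 1]
    feedback: str  # judge's advice for the next revision


def evaluator_optimizer(
    task: str,
    generate: Callable[[str], str],
    evaluate: Callable[[str, str], Evaluation],
    refine: Callable[[str, str, str], str],
    threshold: float = 0.8,
    max_iterations: int = 3,
) -> Tuple[str, List[Evaluation]]:
    """Draft, judge, and revise until the score reaches `threshold`.

    At most `max_iterations` revisions are made. The best-scoring draft is
    returned (not necessarily the last one) together with every evaluation.
    """
    draft = generate(task)
    history: List[Evaluation] = []
    best_draft, best_score = draft, float("-inf")
    for round_no in range(max_iterations + 1):
        result = evaluate(task, draft)
        history.append(result)
        if result.score > best_score:
            best_draft, best_score = draft, result.score
        # Stop once the threshold is met or the revision budget is spent.
        if result.score >= threshold or round_no == max_iterations:
            break
        draft = refine(task, draft, result.feedback)
    return best_draft, history


# Stubs standing in for LLM calls: each revision appends "+" and the fake
# judge awards 0.2 more per "+", starting from 0.5.
def fake_generate(task: str) -> str:
    return "draft"


def fake_evaluate(task: str, draft: str) -> Evaluation:
    return Evaluation(score=0.5 + 0.2 * draft.count("+"), feedback="add detail")


def fake_refine(task: str, draft: str, feedback: str) -> str:
    return draft + "+"


final, history = evaluator_optimizer(
    "Summarize the release notes", fake_generate, fake_evaluate, fake_refine
)
print(final, [round(e.score, 2) for e in history])  # draft++ [0.5, 0.7, 0.9]
```

Capping the number of revisions bounds cost when the judge never awards the target score, and returning the best draft seen guards against a revision that scores worse than its predecessor.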

Key Features

- LLM-as-Judge: Automated quality evaluation using specialized evaluator agents

- Iterative Refinement: Multiple improvement cycles until the quality threshold is met

- Configurable Thresholds: Set minimum quality standards for different use cases
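A specialized evaluator agent is easier to act on when it returns structured output. The sketch below assumes the judge is prompted to reply with JSON containing a rating on a hypothetical four-level scale plus feedback; `QualityRating`, `build_judge_prompt`, and `parse_judgment` are illustrative names rather than part of a particular framework, and the LLM call itself is left out.

```python
import json
from dataclasses import dataclass
from enum import IntEnum


class QualityRating(IntEnum):
    # Hypothetical ordered scale; higher values mean better quality.
    POOR = 0
    FAIR = 1
    GOOD = 2
    EXCELLENT = 3


@dataclass
class Judgment:
    rating: QualityRating
    feedback: str
    needs_improvement: bool


def build_judge_prompt(task: str, response: str) -> str:
    # Ask the evaluator for machine-readable output so the loop can act on it.
    return (
        "You are a strict reviewer. Rate the response to the task as POOR, "
        "FAIR, GOOD, or EXCELLENT and say what to improve. Reply only with "
        'JSON of the form {"rating": "...", "feedback": "..."}.\n\n'
        f"Task: {task}\n\nResponse:\n{response}"
    )


def parse_judgment(raw: str, min_rating: QualityRating) -> Judgment:
    """Parse the judge's JSON reply and compare the rating with the threshold.

    Raises ValueError for malformed JSON and KeyError for an unknown rating.
    """
    data = json.loads(raw)
    rating = QualityRating[data["rating"].strip().upper()]
    return Judgment(
        rating=rating,
        feedback=data.get("feedback", ""),
        needs_improvement=rating < min_rating,
    )


reply = '{"rating": "fair", "feedback": "Define LLM-as-judge before using it."}'
judgment = parse_judgment(reply, min_rating=QualityRating.GOOD)
print(judgment.rating.name, judgment.needs_improvement)  # FAIR True
```

Using an ordered `IntEnum` lets the minimum quality standard be expressed as a single comparison (`rating < min_rating`).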

Use Cases

- Content Quality Control: Ensure documentation meets editorial standards

- Code Review Automation: Iteratively improve code quality and documentation

- Research Paper Refinement: Multi-pass improvement of academic writing

- Customer Communication: Refine responses for clarity and professionalism
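Different use cases can warrant different quality bars. A minimal sketch of per-use-case thresholds, assuming a judge score in [0, 1]; `RefinementPolicy`, `POLICIES`, `policy_for`, and `should_refine` are hypothetical names, and the numbers are illustrative placeholders rather than recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefinementPolicy:
    min_score: float     # judge score (assumed 0..1) a response must reach
    max_iterations: int  # cap on refinement rounds to bound cost and latency


# Illustrative values only; tune them against your own evaluations.
POLICIES = {
    "content_quality": RefinementPolicy(min_score=0.80, max_iterations=3),
    "code_review": RefinementPolicy(min_score=0.90, max_iterations=4),
    "research_paper": RefinementPolicy(min_score=0.85, max_iterations=5),
    "customer_communication": RefinementPolicy(min_score=0.75, max_iterations=2),
}
DEFAULT_POLICY = RefinementPolicy(min_score=0.70, max_iterations=2)


def policy_for(use_case: str) -> RefinementPolicy:
    # Fall back to a default policy for use cases without explicit settings.
    return POLICIES.get(use_case, DEFAULT_POLICY)


def should_refine(use_case: str, score: float, rounds_done: int) -> bool:
    """True if the response is below threshold and refinement rounds remain."""
    policy = policy_for(use_case)
    return score < policy.min_score and rounds_done < policy.max_iterations


print(should_refine("code_review", 0.85, rounds_done=1))             # True
print(should_refine("customer_communication", 0.85, rounds_done=0))  # False
```

Pairing each threshold with an iteration cap keeps a strict bar from looping indefinitely when the judge never awards the target score.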

Full Implementation

See the complete evaluator-optimizer implementation with research-based quality metrics.